Generating secure randomness on Ethereum using SNARKs

Jan 12, 2023 | amangottumukkala, sinasabet, Georgios Konstantopoulos

Contents

Introduction

In this post, we propose designs and reference implementations that utilize SNARKs and VDFs to achieve fully secure randomness on Ethereum.

Decentralized applications on Ethereum like NFT mints, lotteries, games, and more require secure randomness so their contracts are fair. State-of-the-art randomness services meet some of the requirements for secure randomness but still have flaws, such as cost, biasability, and liveness.

What makes randomness secure?

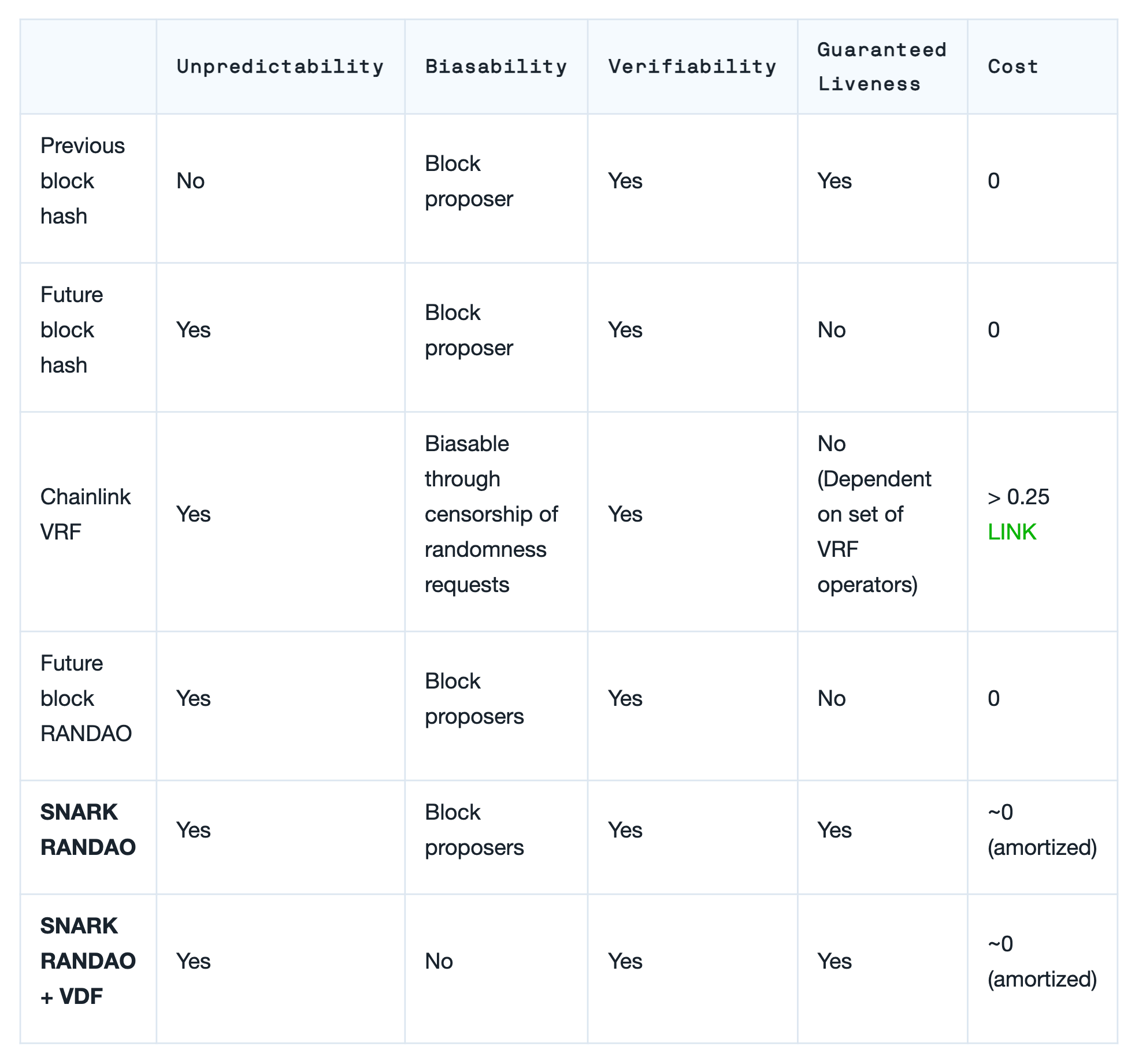

In order for a randomness source to be secure, it needs to have the following four properties:

- Unpredictable: Random value can’t be anticipated.

- Unbiasable: Actors contributing to randomness generation can’t meaningfully bias the result.

- Verifiable: Any interested actor can verify the integrity of the random number’s generation.

- Live: Randomness requests can be fulfilled at any future point in time without requiring any particular actor's participation, assuming the liveness of the base layer.

Additionally, an ideal randomness source should be inexpensive or free for consumers in order to be useful.

See our contributions in bold in the table below:

What has been done before?

Using a Block's Hash

Block hashes are a simple, zero-delay mechanism for retrieving pseudo-random values and are suitable for low-stakes environments, but are not secure.

// Use the previous block's hash as randomness.

function coinflip() view external returns (bool) {

return uint256(blockhash(block.number-1)) % 2 == 0;

}

Historical block hashes are predictable since they’ve been publicly exposed to the entire network. If an attacker can predict the random value that will be used, they may be able to determine and manipulate the outcome of a specific situation, like the outcome of a randomized lottery. For this reason, secure randomness beacons commit to a future unknown value, like a future block hash, to ensure unpredictability.

For the remainder of this blog post, all randomness beacon constructions require committing to future, unknown values even when not stated explicitly.

The below unoptimized example demonstrates the concept of committing to a future block:

contract RandomnessProvider {

/// Stores the block a request is committed to.

mapping(bytes32 => uint256) public revealBlock;

/// User requests randomness for a future block along with a request id.

function commitRandomness(bytes32 _requestId, uint256 _revealBlock) external {

require(_revealBlock > block.number, "Must commit to a future block");

require(revealBlock[_requestId] == 0, "Request already submitted");

revealBlock[_requestId] = _revealBlock;

}

/// Returns the blockhash of the block after checking that the request's target

/// block has been reached.

function fetchRandomness(bytes32 _requestId) public view returns (uint256) {

bytes32 randomnessBlock = revealBlock[_requestId];

require(block.number > randomnessBlock, "Request not ready");

require(block.number <= randomnessBlock + 256, "Request expired");

return blockhash(randomnessBlock);

}

}

The above scheme is unpredictable and verifiable, but it is biasable because the block proposer for a given slot can directly manipulate the block’s hash by manipulating the block’s body.

It also faces a liveness issue due to a limitation of the BLOCKHASH opcode. The BLOCKHASH opcode only has access to the last 256 block hashes, which requires applications to use the committed block hash within a limited time range.

Chainlink VRF

Chainlink’s oracles provide unpredictable and verifiable random values on-chain using a Verifiable Random Function (originally proposed by Micali et al, cryptography ELI5).

However, biasability still exists because a Chainlink VRF operator could censor a request via omission if the generated randomness is unfavorable. The cost to bias can be increased by introducing slashing (to be deployed by Chainlink), but there still may exist a random value used in a system using the VRF which may make biasing a profitable strategy. Participation in the VRF operator set would ideally be permissionless, although that may also be addressed via staking/slashing.

Separately, integrating with the Chainlink VRF would ideally be free/amortizable over many requests, whereas the current design incurs additional gas overheads and native fees to align the incentives of node operators.

Beacon Chain RANDAO

RANDAO is an Ethereum-native pseudorandom value produced via a cryptoeconomic commit-reveal game played by Ethereum’s block proposers. To explain in more detail, RANDAO is an accumulator that collects randomness from block proposers. In every block, a proposer includes a deterministic value known as the randao_reveal which represents their individual contribution to the overall RANDAO value. The new RANDAO value is determined by mixing the previous RANDAO value with the randao_reveal of the proposed block, which is showcased in the following code snippet.

def process_randao(state: BeaconState, body: BeaconBlockBody) -> None:

epoch = get_current_epoch(state)

# Verify RANDAO reveal

proposer = state.validators[get_beacon_proposer_index(state)]

signing_root = compute_signing_root(epoch, get_domain(state, DOMAIN_RANDAO))

assert bls.Verify(proposer.pubkey, signing_root, body.randao_reveal)

# Mix in RANDAO reveal

mix = xor(get_randao_mix(state, epoch), hash(body.randao_reveal))

state.randao_mixes[epoch % EPOCHS_PER_HISTORICAL_VECTOR] = mix

Credit to Ben Edgington, code snippet taken from here.

The process_randao function first verifies the randao_reveal before mixing it. The randao_reveal is designed to be deterministic, so block proposers cannot contribute arbitrary values that would bias the resulting RANDAO value. The randao_reveal for a slot must be the block proposer's signature of the epoch number for the slot they are assigned to, which commits them to a single, allowed contribution of randomness. If a block proposer creates a block with a different randao_reveal, that block is considered invalid and the value is not mixed.

While block proposers cannot choose arbitrary randao_reveal values to bias the RANDAO, they can choose not to propose a block if the resulting RANDAO is unfavorable. In this case, the next block will be created by the next block proposer, which will result in a different RANDAO value due to the new block proposer's contribution of a different randao_reveal. This ability to omit a block gives block proposers the option to "reroll" a randomness result, which can be unfair. This is a form of bias through omission and is commonly referred to as bits of influence over the RANDAO.

By combining RANDAO with the "commit-to-future-value" technique from the block hash example, we can create a source of randomness that is both unpredictable and verifiable while also eliminating the dependency on third-party networks like Chainlink. Additionally, this approach leads to a random value that is less susceptible to bias compared to simply using the block hash. However, it is important to note that using a future RANDAO alone is still prone to bias and presents a liveness issue.

Biasability

As previously mentioned, block proposers can choose to omit a block if the resulting RANDAO value is unfavorable.

Liveness

The RANDAO value can currently be accessed on the execution layer via the DIFFICULTY opcode, which returns the RANDAO for only the block the transaction is being included in.

// Returns the RANDAO of the block this function is called in.

// For example if a transaction calls this function and is included in

// block 10 the RANDAO of block 10 is returned.

function getRandomValue() internal returns (uint256) {

return block.difficulty;

}

This straightforward method of utilizing the RANDAO of a block directly eliminates the problem of liveness, as the RANDAO value will always be accessible. However, it still presents issues of predictability similar to those encountered when using historical block hashes. To address this predictability problem, we can commit to the RANDAO of a future block and reference it once the block is proposed and the RANDAO is made available.

However, using a previously committed-to future RANDAO block introduces a liveness issue, because the DIFFICULTY opcode only returns the current RANDAO value. This necessitates a transaction to be included in the exact block that was previously committed to, which can be hard to guarantee and cannot be recovered if missed.

Can we do better

Lifting The Same Block DIFFICULTY Opcode Constraint

As previously mentioned, accessing the RANDAO through the DIFFICULTY opcode is not practical because transactions that consume randomness must be included in the same block they previously committed to. In this section, we will explore ways to overcome this constraint.

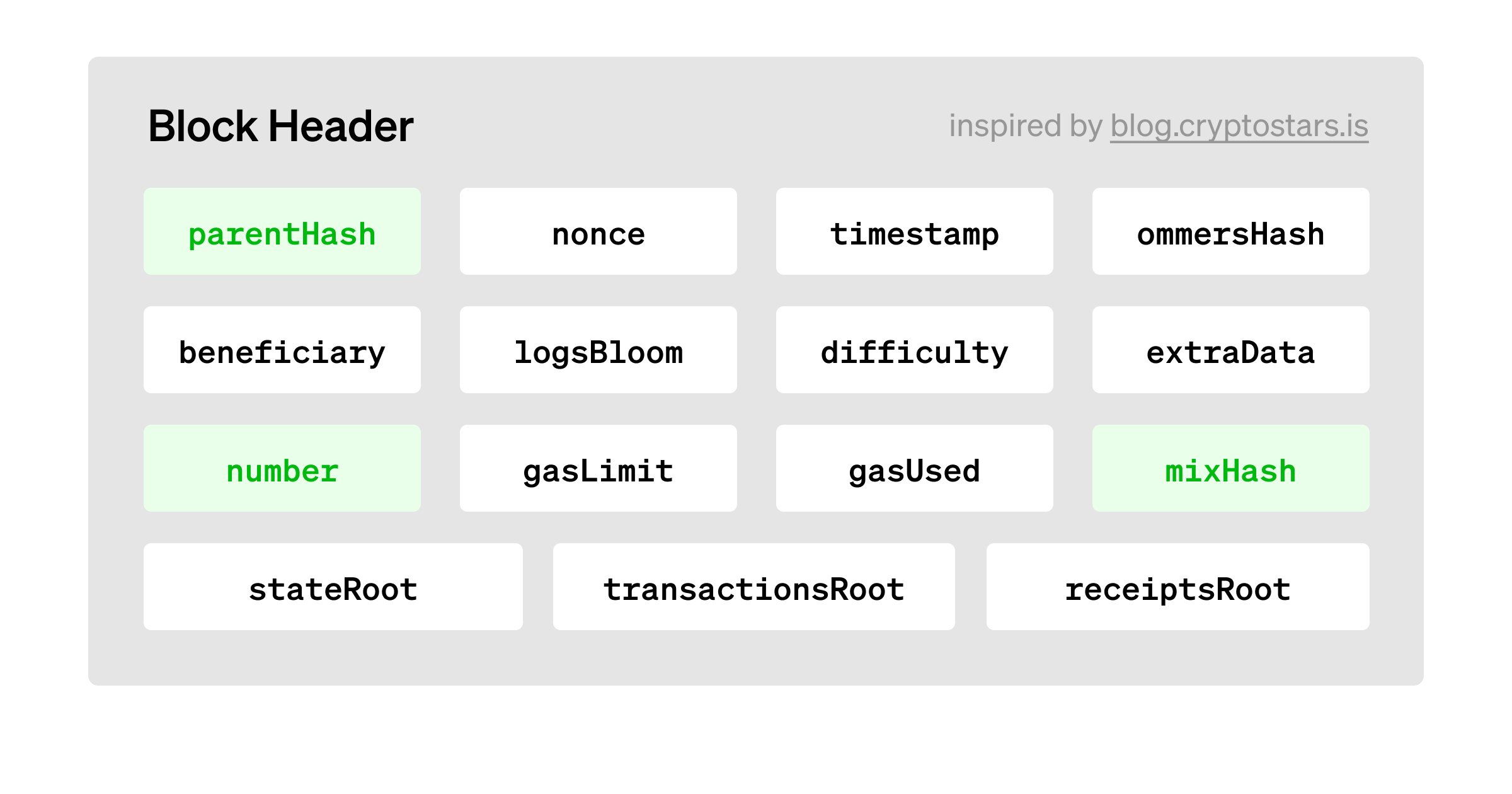

One alternative method for accessing the RANDAO is through the mixHash field in a block header. A block header is a data structure that contains metadata about a block, such as its height (number), the hash of the previous block (parentHash), the RANDAO value (mixHash), and more.

Instead of relying on the DIFFICULTY opcode, we can create a construction that allows us to verify and reference historical RANDAO values by referencing historical block header data.

To verify historical block header data, one can leverage the block hash of the relevant block. Block hashes act as commitments to the relevant block header data and are unique identifiers for a block. One can verify the integrity of a block and its contents using a block hash, as any changes to the block header data will result in a different block hash.

To derive a block hash from a block header on Ethereum, you can:

- Obtain the block header data for a specific block.

- RLP (Recursive Length Prefix) encode the block header data into a binary string.

- Use Keccak256 to hash the RLP encoded block header and compute the block hash.

Here's a sample function that attests to a historical RANDAO using a block header and its block hash:

function submitRANDAO(bytes memory rlpEncodedHeader) external {

// Validate blockhash is a valid historical blockhash.

bytes32 blockHash = keccak256(rlpEncodedHeader);

if (!isValidHistoricalBlockhash(blockHash)) {

revert BlockhashUnverified(blockHash);

}

// Decode RLP encoded block header.

RLPReader.RLPItem[] memory ls = rlpEncodedHeader.toRlpItem().toList();

// Extract out mixhash (RANDAO) from block header.

uint256 RANDAO = ls[13].toUint();

// Stores RANDAO value at this block number.

blockNumToRANDAO[blockNum] = RANDAO;

}

The function first hashes the inputted RLP encoded block header with Keccak256 and verifies that the computed block hash references a valid historical block. If this check were not in place, it would be possible to pass in a fake block header from the past and falsely attest to historical block header data. After validating the block header, the function then RLP decodes the encoded block header and stores the RANDAO value in storage for anyone to reference in the future.

There are open-source Solidity libraries available for RLP decoding in smart contracts. However, verifying an arbitrary historical block hash as a real historical block hash on-chain is a more complex task.

The simplest way to verify a historical block hash is the BLOCKHASH opcode which allows contracts to reference the most recent 256 block hashes. This allows us to attest to any block header data within the last 256 blocks and lifts the same block lookback limit presented by the DIFFICULTY opcode. While the BLOCKHASH opcode allows us to reference more RANDAO values than the DIFFICULTY opcode, it does not fully solve the issue of liveness during times of censorship or congestion. In such cases, transactions using the BLOCKHASH opcode may be included too far from the block for which they require a hash.

To create a fully live randomness beacon, we need a construction that does not have a hard lookback limit of 256 blocks. In the remainder of this blog post, we refer to constructions that verify historical block hashes as “block hash oracles”.

Lifting the 256 Block Hash Lookback Limit

Block Hash Oracle Contract via BLOCKHASH Opcode

One way to overcome the limited lookback range of the BLOCKHASH opcode is to create a contract specifically designed for storing historical block hashes while still using the BLOCKHASH opcode. This allows consumers to reference a wider range of historical block header data without significantly increasing complexity.

/// @notice Maps validated blockhashes to their block number.

mapping(bytes32 => uint256) public blockhashToBlockNum;

/// @notice Validates the block hash of the block before this tx is called.

function poke() public {

blockhashToBlockNum[blockhash(block.number - 1)] = blockNum;

}

/// @notice Validates the block hash of a specified block number using

/// the blockhash opcode. The blockhash opcode is currently limited to

/// 256 blocks in the past.

/// @param blockNum Block number to validate.

function pokeBlocknum(uint256 blockNum) public {

bytes32 blockhashVal = blockhash(blockNum);

if (blockhashVal == bytes32(0)) {

revert PokeRangeError();

}

blockhashToBlockNum[blockhashVal] = blockNum;

}

With even just a single party fulfilling block hash requests, once they're stored in the contract any consumer can reference the value at any point in the future. However, the use of the BLOCKHASH opcode still imposes a limit on the number of blocks that can be referenced, making it possible for a requested block hash to miss its window and never be stored in the contract. As a result, this approach does not provide full liveness, even though it allows for greater access to block hashes.

Block Hash Oracle Contract via SNARKs

It is theoretically possible to reference blocks arbitrarily far back in history due to the inclusion of a reference to the parent block's hash in the block header. However, proving a historical block hash through sequential proofs of parent hashes grows linearly the further back in history you go, and can quickly become prohibitively expensive. One way to address this issue is to use SNARKs, which have the property of succinctness, to create a constant-sized proof for a historical block hash. Using these history proofs, it becomes feasible to create a fully live and robust block hash oracle.

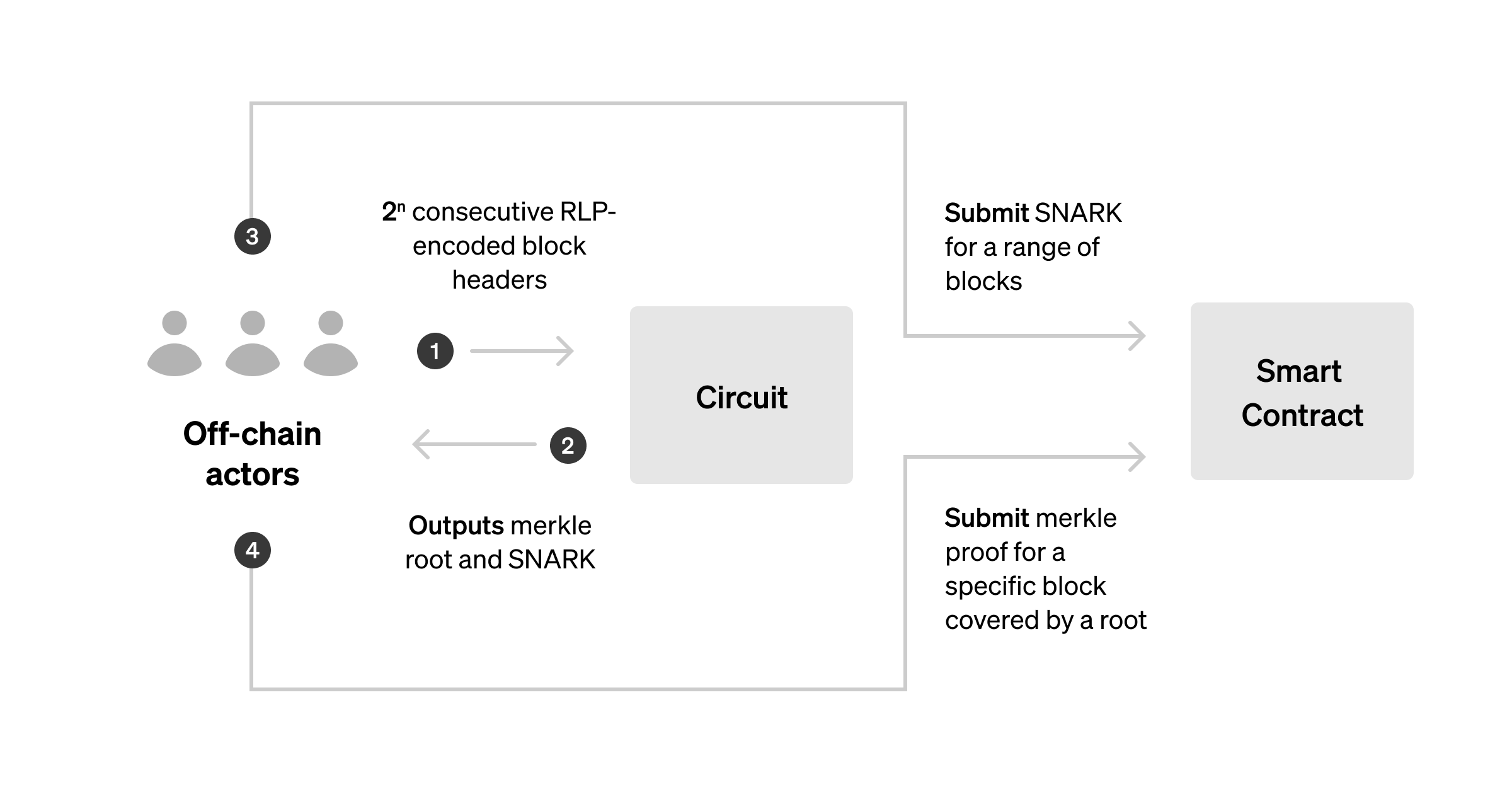

One example of a method for extending the BLOCKHASH opcode oracle is to create a SNARK that proves a merkle root represents a commitment of ordered block hashes that reference each other through the parentHash in their respective block's header, with the last block hash in the list being the most recent.

A SNARK that proves the merkle root representing a valid, ordered list of block hashes can be generated by an off-chain prover and sent to a verifier smart contract. The contract then verifies the proof which ensures the merkle root matches the commitment scheme outlined above, and separately confirms that the last block hash in the list corresponds to an existing block hash that the contract has previously validated. Without anchoring the list to a previously validated block hash, it would be possible to attest to an arbitrary list of sequential block headers that does not accurately reflect the true state of Ethereum. If the proof and the last block in the list are confirmed to be valid, the contract cements the merkle root of block hashes in storage. Using this construction, any RANDAO value in the covered range can be fulfilled.

With this method, we can conservatively attest to ~1024 block hashes (n=10) within a single proof, with potential to do more as proving systems continue to mature. We can feasibly look arbitrarily far back in history by repeating this process until we cover the range we want to access.

Assuming these components are open sourced, any actor can plausibly keep the system live by generating and submitting a proof for large intervals of block headers.

We now have a SNARK powered block hash oracle with strong liveness guarantees, but there’s still the issue of RANDAO's biasability. We address solutions in the next section.

Removing bias with VDFs

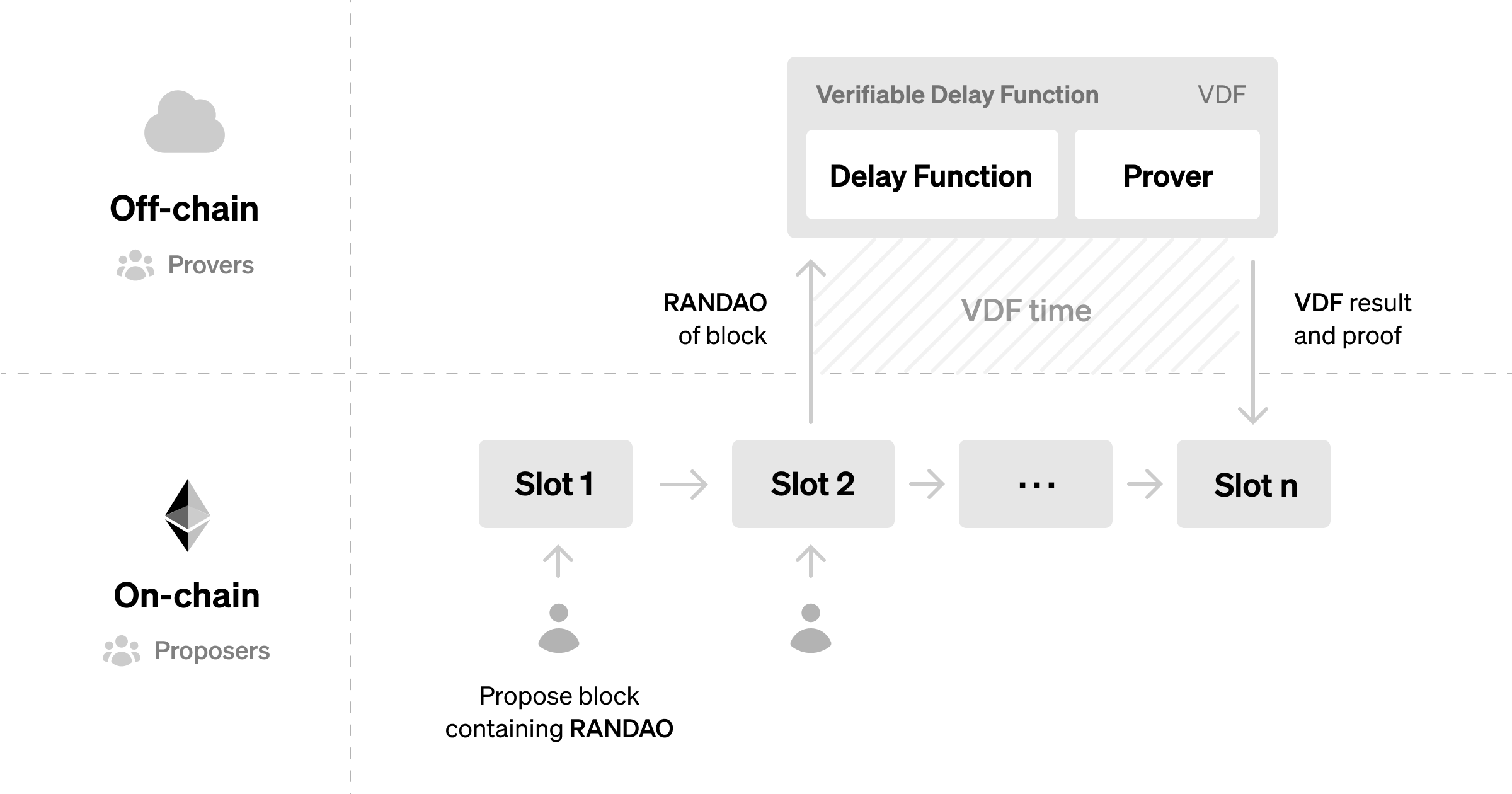

As seen in the process_randao code snippet above, calculating a new RANDAO value is essentially just an xor operation. RANDAO can be biased because a block proposer at their assigned slot can quickly calculate the result of the xor operation, predict the outcome of their contribution to the randomness generation process, and choose not to propose their block if the outcome is unfavorable. A verifiable delay function can be applied on top of RANDAO to ensure block proposers can’t know the result of their contribution within their allotted time to contribute, which means their ability to skip their slot cannot bias the randomness result.

Verifiable Delay Functions (VDF), proposed by Boneh et al are cryptographic functions that require a certain amount of time to compute its output (the "delay"), but can be easily verified once it is produced (the "verifiable"). Examples of VDFs are iteratively hashing an input with SHA256 or iteratively algebraically transforming it with MinRoot, to compute the output. VDFs are designed to be computationally intensive and can only be performed sequentially, meaning that it is difficult for an adversary with access to parallel processing to compute the output significantly faster than an honest actor with sufficient hardware.

We can combine two previous ideas, Justin Drake’s RANDAO+VDF construction and VDFs like VeeDo from StarkWare or Nova-powered minRoot VDF to create unbiasable randomness for the execution layer. An off-chain actor runs the VDF computation using a block’s RANDAO value as input and is returned a proof of execution along with the result (uint256), which is used as the random value. The proof and returned random value are submitted to a verifier contract which verifies the VDF proof and stores the random value in perpetuity for anyone to reference. Crucially, anyone can fill the role of running the VDF, proving historical block hashes, and submitting relevant proofs on-chain.

While this construction theoretically grants us secure randomness, VDFs aren’t that simple in practice. It is difficult to quantify the absolute minimum time a VDF can be run, which is crucial for deploying a production, unbreakable VDF. VDFs are an active area of research and have additional implications on UX and security in Ethereum.

What did we do?

Randomness Beacon powered by RANDAO and SNARK Block Hash Oracles

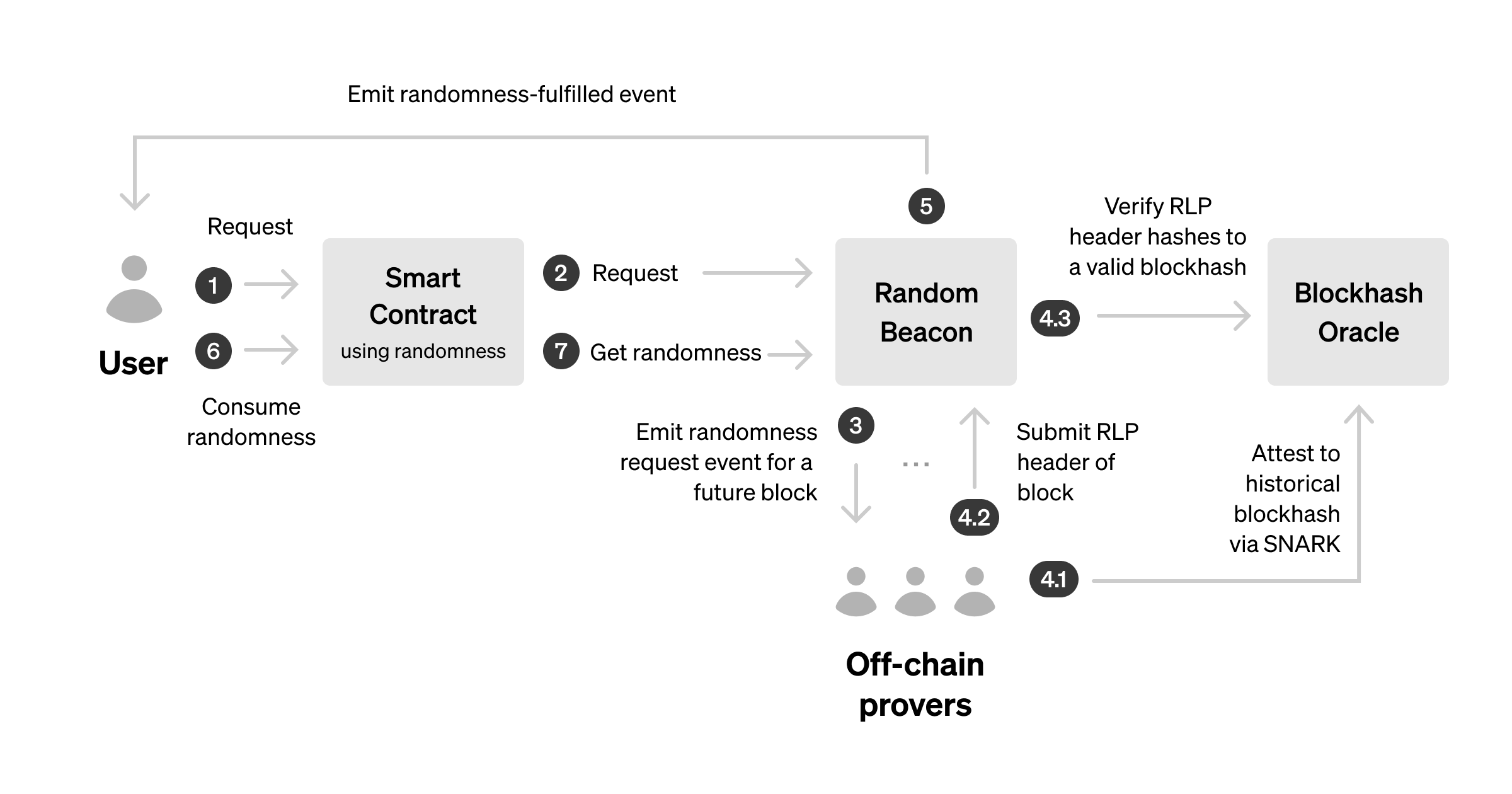

By combining SNARKs and VDFs, we developed a simple, ETH native, VDF-compatible randomness beacon powered by RANDAO and pluggable block hash oracles. We designed it to mimic existing randomness request flows as closely as possible.

/// @notice Interface for contracts providing randomness.

interface IRandomnessProvider {

/// @notice Emits a randomness request for a specific block.

event RandomnessRequested(address indexed requester, uint256 indexed randomnessBlock);

/// @notice Emits the fullfillment of ranndomness at a specific block.

event RandomnessFulfilled(uint256 indexed fulfilledBlock, uint256 randomSeed);

/// @notice Requests randomness and returns the block number tied to the request.

function requestRandomness() external returns (uint256);

/// @notice Requests randomness from a specific block.

function requestRandomnessFromBlock(uint256 blockNum) external;

/// @notice Returns >= 1 random values from a specific block.

function fetchRandomness(uint256 blockNum, uint256 numberRandomValues) external view returns (uint256[] memory);

}

We wrote a randomness beacon interface, a working RANDAO-based beacon, and a VDF reference implementation using StarkWare's VeeDo VDF (not production ready) available on our Github.

/// @notice Interface that all blockhash oracles must implement

/// so RandomnessProvider can plug in.

interface IBlockhashOracle {

/// @notice Returns the nonzero, accurate block number

/// if the blockhash is verified.

function blockHashToNumber(bytes32 hash) external view returns (uint256);

}

We've also written a block hash oracle interface, blockhash opcode-based block hash oracle, circuit that proves historical block hashes, and a SNARK-based block hash oracle that verifies proofs and attests to historical block hashes on-chain.

These contracts are proof-of-concepts, but are prepared to serve as a foundation for use once VDFs are production-ready.

The contracts and circuits are unoptimized, unaudited, and experimental — use at your own risk! Issues and pull requests are welcome.

Future Work

There is plenty to do and many ways to contribute. Here are a few ideas we invite you to work on.

- Prover Incentives: Design an efficient mechanism that minimizes the cost of randomness for users while incentivizing relays to fulfill requests.

- Block Hash Oracle: Create an efficient, production block hash oracle that implements our

IBlockhashOracleinterface. - VDF: Write a Solidity verifier contract for a Nova SNARK VDF.

- Noir Libraries (Emerging Domain Specific Language for SNARK proving systems)

- RLP utilities

- Implement history proof circuit (eg. block hash oracle)

- Implement a VDF

Conclusion

While there are a variety of flavors of randomness on Ethereum, we’ve found that many of them have subtle flaws such as biasability, liveness, unnecessary costliness, or additional trust assumptions.

To address these issues, we propose potential designs uniquely enabled by SNARKs and VDFs. We also developed proof-of-concept drop-in implementations that can be easily integrated with production-ready verifiable delay functions (VDFs) to provide secure, cost-effective, and straightforward integration of randomness for Ethereum's execution layer.

If you’re interested in exploring any of these topics, don’t hesitate to get in touch! You can reach us on Twitter at @amangotchu, @sina_eth_, and @gakonst.

Shoutout to Yi Sun and Jonathan Wang for introducing us to the concept of viable SNARK block hash oracles.

Acknowledgments: Yi Sun, Jonathan Wang, Dan Lee, Transmissions11, Dan Robinson, Maxim Vezenov, Kevaundray Wedderburn, Justin Drake

Graphics By: Achal Srinivasan

Disclaimer: This post is for general information purposes only. It does not constitute investment advice or a recommendation or solicitation to buy or sell any investment and should not be used in the evaluation of the merits of making any investment decision. It should not be relied upon for accounting, legal or tax advice or investment recommendations. This post reflects the current opinions of the authors and is not made on behalf of Paradigm or its affiliates and does not necessarily reflect the opinions of Paradigm, its affiliates or individuals associated with Paradigm. The opinions reflected herein are subject to change without being updated.